Good afternoon.

I would like to ask the following questions about the Ignition Docker image:

- Is it possible to run the image twice on the same machine to test redundancy? My idea is to configure one instance as Master and another as Backup, just for testing purposes.

  I’ve tried changing the HTTP port in the environment variables of the second container, but I can’t connect to the Gateway.

- I would like to know whether running Ignition as a Docker image is a safe and stable option for a production environment of 50,000 tags (read frequency: 1 second) on a machine with 8 cores and 32 GB RAM.

Best Regards.

@Alberto_AG Thank you for reaching out--I can help you with these questions.

Question 1 on running multiple containers

It's definitely possible to run multiple containers on the same machine; in fact, this is one of the most exciting capabilities of running Ignition on Docker. When you launch an Ignition container on Docker, you're typically going to "publish" one or more ports. This involves defining a port on the host that will forward to a port in the container. You'll probably want to leave the port in the container at its default and adjust the port on your host computer. Whenever I want to run multiple containers on Docker, I first reach for Docker Compose. This lets me define the containers I want to launch in one place instead of running individual commands to manage each container's lifecycle. See an example Compose YAML file below:

# Dual Gateway Docker Compose Example
---
services:
  frontend:
    image: inductiveautomation/ignition:8.1.17
    ports:
      # Left-hand side is the port on your host OS, right-hand is the container port
      - 9088:8088
    volumes:
      - frontend-data:/usr/local/bin/ignition/data
    environment:
      GATEWAY_ADMIN_PASSWORD: changeme
      IGNITION_EDITION: standard
      ACCEPT_IGNITION_EULA: "Y"
    command: >
      -n Ignition-frontend
      -m 1024

  backend:
    image: inductiveautomation/ignition:8.1.17
    ports:
      - 9089:8088
    volumes:
      - backend-data:/usr/local/bin/ignition/data
    environment:
      GATEWAY_ADMIN_PASSWORD: changeme
      IGNITION_EDITION: standard
      ACCEPT_IGNITION_EULA: "Y"
    command: >
      -n Ignition-backend
      -m 1024

volumes:
  frontend-data:
  backend-data:

Placing this docker-compose.yml file into a folder (named for the "stack" that you're creating) and launching it should bring up both of your containers. See a demo here: IA Forum 60494 - asciinema.org

Question 2 on Running in Production

TL;DR: There really isn't a performance penalty of running Ignition in a container, but there are other aspects that might have folks still leaning towards a traditional installation for production at the moment. Definitely leverage Docker though for your development and simulation/test environments--it can be a great tool.

A container is simply an application running with some sandbox constraints on your host OS. In fact, when you view a list of processes on your host system, you'll see processes from your containers running there alongside everything else (that isn't containerized). That said, from a raw performance perspective, there isn't much difference between running Ignition in a Docker container on a Linux host and running Ignition via a traditional installation on that same Linux host.

I should mention that running containers in production definitely means running on a Linux host, not the Docker Desktop that you might use in development atop Windows/macOS/Linux; that adds extra layering that wouldn't be recommended for production use. The other important aspect to be aware of is that Ignition licensing is easily tripped by updating your containers with a new base image--this is due to how Docker works. Beyond that, the usual considerations for running a production application on a Linux host probably apply, on top of general container best practices.

There's obviously a lot more depth to this topic, but hopefully the above provides you with some useful insights.


One other thing I can offer that might help is a recent stream I also did on building out a multi-gateway Docker Compose stack… Feel free to check that out on my (unofficial!) Twitch channel: https://www.twitch.tv/videos/1315400429



Hello.

Thanks for the quick response.

I have created the “compose” file as you indicated and launched it. I can connect to the Frontend Gateway without any problem, but I can’t connect to the Backend Gateway.

Best Regards.

What do you get with the following?

You’re looking for running (healthy) status for both “services” in the “stack”. Alternatively, you can try http://localhost:9089 as the URL in case something isn’t working quite right in your DNS config. Both should work: *.vcap.me is just a convenient wildcard DNS name that resolves to 127.0.0.1 (localhost) for anything you put in front of it.
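For anyone following along, here is a rough sketch of that kind of status check. It assumes Docker Compose v2 (the `docker compose` subcommand) and the `frontend`/`backend` service names from the Compose file above. The `SERVICES` array is just an illustrative name, and the docker calls are skipped if the CLI isn't on your PATH:

```shell
#!/usr/bin/env bash
# Services defined in the Compose file above (illustrative variable name).
SERVICES=(frontend backend)

# Run this from the folder that holds docker-compose.yml.
if command -v docker >/dev/null 2>&1; then
  # One-line overview: state and health of every service in the stack.
  docker compose ps

  # Last few log lines per service, handy when a gateway is not "healthy".
  for svc in "${SERVICES[@]}"; do
    docker compose logs --tail 20 "$svc"
  done
fi
```

A service that shows as running but never reaches "healthy" is usually worth a look in its logs before changing any port settings.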

Good morning.

Yes, I know that “frontend.vcap.me” and “backend.vcap.me” are the same as “localhost”.

The status of the two containers is “healthy”:

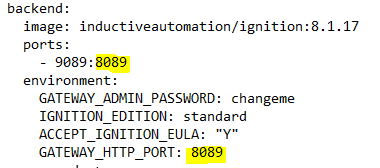

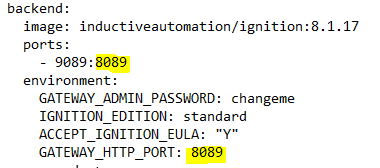

I don’t understand why I can’t connect to the Gateway of the backend container (port 9089). I’ve even modified the compose file to force the HTTP port to change, but it doesn’t work that way either.

I’m using Windows 10, but that shouldn’t be an inconvenience, right?

Best Regards.

It definitely sounds like there is something strange in your environment. We never really covered your environment setup in the previous portions of the thread. If you’re on Windows 10, I’m presuming you’re using Docker Desktop? If so, what version? Also, are you using the WSL2 based engine (from the General settings under configuration)? At this point, it is time to look at your Docker setup.

Good afternoon.

Yes, I’m using Docker Desktop.

The version is v.4.9.0:

I’m using WSL 2 based engine:

On the other hand, in the compose file I changed the ports to 10000 and 11000 respectively. Now the result is worse. The status of the backend container is “unhealthy”:

Best Regards.

Please share your current Compose YAML…

EDIT: Also, just so we’re clear on troubleshooting steps, you might try the following:

# Bring down Compose stack and clear volumes (will remove gateway state/data)
docker compose down -v

# Bring back up fresh (in detached mode)
docker compose up -d

I’m wondering if you’ve gotten your gateway in a strange state and it might be best to remove your volumes and bring it up fresh.


FWIW, I think what probably happened in your particular case was that you:

- Launched the Compose solution.

- Updated the backend service with GATEWAY_HTTP_PORT=8089 and changed the port publish configuration.

- Updated the backend service again to omit that environment variable and changed your port publish once again (this time back to container port of 8088).

When you removed the env var, the existing setting in the data/gateway.xml stayed at the last directed setting (e.g. 8089). Omitting GATEWAY_HTTP_PORT env var doesn’t revert any configuration that might still be in your gateway state (i.e. the data volume), it just doesn’t drive it each time on startup. At this point, the container was still starting on port 8089. You could probably verify this with something like:
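A minimal sketch of one way to check, assuming the stack is running, the service is named `backend` as in the Compose file above, and the port settings in `gateway.xml` sit on lines containing the word "port" (the exact key names are not shown in this thread, so the filter is deliberately loose). `GATEWAY_XML` and `port_lines` are illustrative names:

```shell
#!/usr/bin/env bash
# Path of gateway.xml inside the container; the data directory matches
# the volume mount in the Compose file above.
GATEWAY_XML=/usr/local/bin/ignition/data/gateway.xml

# Keep only the lines that mention a port setting (reads stdin).
port_lines() {
  grep -i 'port'
}

# Dump the file from the running backend service and filter it.
# -T disables TTY allocation so the output can be piped cleanly.
if command -v docker >/dev/null 2>&1; then
  docker compose exec -T backend cat "$GATEWAY_XML" | port_lines
fi
```

If the output shows 8089 while the Compose file publishes to container port 8088, the persisted setting and the port mapping no longer agree.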

That said, resetting (via the docker compose down -v) and re-launching should put you in a good state.


Good afternoon.

The last problem (“unhealthy”) was fixed with the command you indicated: docker compose down -v.

I also managed to fix the first problem (now I see that it is something clear and obvious): port 9089 is being used on my PC, I do not know why:

Now I can connect to both Gateways (containers) on ports 10000 and 11000 respectively:

Frontend:

Backend:

@kcollins1 you are the best. Thank you very much.
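As a side note on that kind of port conflict: a small pre-flight check can show whether something on the host is already listening before you publish a port. This is only a sketch, assuming a bash shell that shares the host's network stack (it relies on bash's `/dev/tcp` feature). `probe_port` is a hypothetical helper, and it only detects listeners reachable on 127.0.0.1:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether anything accepts TCP connections
# on 127.0.0.1 at the given port, using bash's built-in /dev/tcp.
probe_port() {
  local port="$1"
  # The subshell opens (and on exit closes) the connection; its exit
  # status is non-zero when nothing is listening.
  if (exec 3<>"/dev/tcp/127.0.0.1/${port}") 2>/dev/null; then
    echo "port ${port}: already in use"
  else
    echo "port ${port}: no listener on 127.0.0.1"
  fi
}

# Host ports published by the Compose file above.
for port in 9088 9089; do
  probe_port "$port"
done
```

Run it before bringing the stack up, since a running stack will itself occupy the published ports. On Windows itself, `netstat -ano` lists listening ports along with the owning process ID.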


Just one more question: In Windows, where is the path of frontend-data and backend-data volumes?

When you’re in Windows/macOS and running Docker Desktop, the location of named volumes is a bit tricky. Named volumes are managed by Docker, and usually, you don’t directly interact with them from outside, at the host OS level. However, they are just files/folders on the filesystem:
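As a sketch of how to see where those files live, assuming the volume names end in `frontend-data` and `backend-data` (Compose normally prefixes them with the project name, which isn't shown in this thread) and that the docker CLI is available. `VOLUME_SUFFIXES` is an illustrative name:

```shell
#!/usr/bin/env bash
# Volume names from the Compose file above, without the project prefix.
VOLUME_SUFFIXES=(frontend-data backend-data)

if command -v docker >/dev/null 2>&1; then
  for suffix in "${VOLUME_SUFFIXES[@]}"; do
    # The name filter matches on part of the volume name, so this finds
    # the prefixed volume without knowing the project name.
    for vol in $(docker volume ls -q --filter "name=${suffix}"); do
      # Mountpoint is the directory Docker uses to store the volume data.
      mountpoint="$(docker volume inspect --format '{{ .Mountpoint }}' "$vol")"
      printf '%s -> %s\n' "$vol" "$mountpoint"
    done
  done
fi
```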

You’d be right to recognize the path of that volume as something that isn’t on your Windows system. It is located on the under-the-hood Linux VM that is being managed by Docker Desktop. If you want to explore the contents of that volume (and perhaps the associated Ignition container as well), one tool that I enjoy is VSCode’s Docker extension. It makes it pretty easy to attach to a container and explore the filesystem (including mounted volumes) and more.

Lots of potential divergence here depending on what you’re actually wanting to do…

Other than the following:

- Requesting a specific license key: which is the same cost, so not a huge issue

- The container needing an internet connection: likely not a problem on cloud containers, but on-prem potentially

- There being no performance gain or loss: that's not exactly true, since if you are running MULTIPLE gateways you see a performance benefit from reducing the number of OSes you need to run and manage.

- Containers being "new and scary" in the industrial world: Not always a good excuse

What else would you say might cause others to lean this direction?

You’ve actually captured the relevant thoughts. Licensing has some caveats, and your two bullet points describe them well. As to the performance gain/loss I was trying to distill things down to comparing running Ignition in a container versus on the host (on Linux). Definitely some gains to be had if you’re currently running gateways segregated via virtual machines.


What about a Windows host vs. a Linux container? Any performance thoughts there?

I have a personal preference towards Linux systems, but I haven’t done much formal benchmarking from an Ignition application perspective (perhaps there are some other IA folks floating around who have).

The one thing I can say is that the fastest Gateway cold start (with automated commissioning) I’ve ever seen has been via aarch64 Docker container (so running on a Linux ARM64 VM via Docker Desktop) on my personal 14" rMBP (M1 Pro) at 11 seconds.


@kcollins1 do you still recommend only running Ignition containers on Linux hosts in a production environment? We are curious about dockerizing our Ignition gateways but all of our servers are running Windows.

Docker (Engine) on Windows is only available via Docker Desktop, which means it's still not suitable for production.
