Hello,

I have a queryHistory() call that queries 1000 tags. In Ignition 8.3.1 its response time was approximately 2 s. After upgrading to Ignition 8.3.2, the same call takes approximately 10 s or more.

All 1000 tags are historized with metadata=true and are in discrete mode.

I am calling queryHistory() like this:

queryHistory(queryParams, tagHistoryDataset)

where queryParams is:

    new BasicTagHistoryQueryParams(
        tagPaths,
        new Date(startTime),
        new Date(endTime),
        1,
        TimeUnits.SEC,
        AggregationMode.Average,
        ReturnFormat.Wide,
        alias,
        Collections.singletonList(AggregationMode.Average)
    );

tagPaths is a List, startTime and endTime are long values that define a 10-minute window beginning at startTime, and alias is a List in which each entry is the string form of the corresponding tag path.
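
For illustration, here is a minimal sketch of how the time window and alias list described above could be prepared in plain Java. The class `QueryInputs` and its methods are hypothetical names and not part of the Ignition SDK. The sketch only assumes that each tag path has a usable string form.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration only; not part of the Ignition SDK.
final class QueryInputs {
    // Length of the query window described in the post: 10 minutes.
    static final Duration WINDOW = Duration.ofMinutes(10);

    // End of the window in epoch milliseconds, given its start.
    static long endTimeFor(long startTimeMillis) {
        return startTimeMillis + WINDOW.toMillis();
    }

    // One alias per tag path, using the string form of the path itself.
    static List<String> aliasesFor(List<?> tagPaths) {
        List<String> aliases = new ArrayList<>(tagPaths.size());
        for (Object path : tagPaths) {
            aliases.add(String.valueOf(path));
        }
        return aliases;
    }
}
```

With these helpers, `new Date(startTime)` and `new Date(QueryInputs.endTimeFor(startTime))` would bound the 10-minute window, and `QueryInputs.aliasesFor(tagPaths)` would produce the alias list.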

I am providing the fully qualified path for each entry in tagPaths, in the format “histprov:TestHistorian:/sys:ignition-8dc6e43e15ba:/prov:default:/tag:testsine0”.
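
As a sketch of that format, the path strings could be assembled as shown below. The class `HistoricalPaths` is a hypothetical helper, and the `testsine0` to `testsine999` naming scheme is an assumption extrapolated from the single example above. Converting each string into the Path object that the query expects is not shown.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration only; not part of the Ignition SDK.
final class HistoricalPaths {
    // Joins the four components in the order used by the example path.
    static String qualified(String historian, String system,
                            String tagProvider, String tagPath) {
        return "histprov:" + historian
                + ":/sys:" + system
                + ":/prov:" + tagProvider
                + ":/tag:" + tagPath;
    }

    // Assumed naming scheme: testsine0, testsine1, ... testsine(count-1).
    static List<String> testSinePaths(String historian, String system,
                                      String tagProvider, int count) {
        List<String> paths = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            paths.add(qualified(historian, system, tagProvider, "testsine" + i));
        }
        return paths;
    }
}
```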

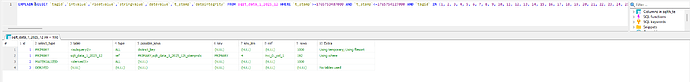

The tagHistoryDataset is:

BasicStreamingDataset tagHistoryDataset = new BasicStreamingDataset();

Is this set up incorrectly for 8.3.2, for example because of a missing flag or parameter? I would appreciate advice or pointers on how to get the response time back to approximately 2 s.
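
To keep the 8.3.1 and 8.3.2 measurements comparable, the call could be timed with a small harness such as the sketch below. `QueryTimer` is a hypothetical class, not an SDK facility. The `Runnable` would wrap the actual queryHistory(queryParams, tagHistoryDataset) call, including any exception handling it needs.

```java
import java.util.Arrays;

// Hypothetical timing harness for illustration only; not part of the Ignition SDK.
final class QueryTimer {
    // Runs the call the given number of times and returns each duration in milliseconds.
    static long[] timeMillis(Runnable call, int runs) {
        long[] samples = new long[runs];
        for (int i = 0; i < runs; i++) {
            long start = System.nanoTime();
            call.run();
            samples[i] = (System.nanoTime() - start) / 1_000_000L;
        }
        return samples;
    }

    // Median of the samples; less sensitive to a single slow first run than the mean.
    static long median(long[] samples) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int mid = sorted.length / 2;
        return sorted.length % 2 == 1
                ? sorted[mid]
                : (sorted[mid - 1] + sorted[mid]) / 2;
    }
}
```

Running the same harness with the same tag list and time window on both versions would show whether the slowdown is consistent across runs or limited to the first (cold) query.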

Separately, but related to this: it seems that some query calls are being deprecated on the Perspective scripting side (see the 8.1 to 8.3 Upgrade Guide in the Ignition User Manual), for example “system.tag.queryTagHistory” being replaced by “system.historian.queryRawPoints”. Is something similar happening on the SDK side as well?