I am having a persistent issue where my OPC connections all drop at the same time. Currently we have 8 connections, 4 of which will drop simultaneously and have to be manually reconnected (3 are intentionally deactivated and 1 is Ignition's default connection).

I had some issues relating to CPU/RAM capacity that I thought were causing the problem, as CPU usage would easily hit 90%+, but after upgrading the cloud instance the problem persists (though less frequently).

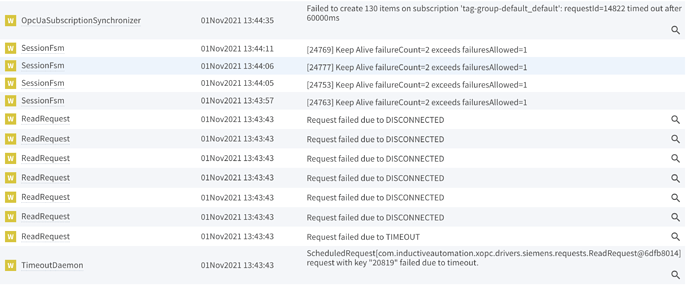

I have some of the OPC-related loggers set to DEBUG and capture events like this:

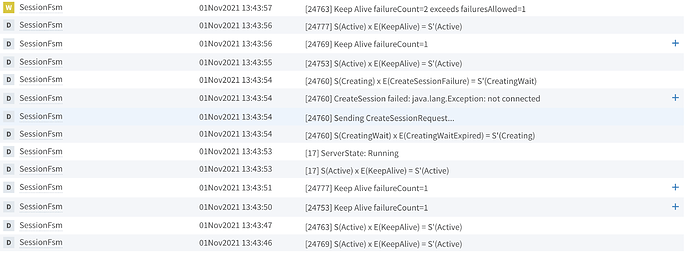

I do have the SessionFsm DEBUG info as well:

Which logger or loggers should I change the level of to better capture the event that is causing this? Any help in diagnosing the issue would be appreciated. It is occurring with both a Siemens device and multiple Beckhoff devices.

The SessionFsm logger is already a good start. It’s pretty clear that the disconnect happens because the keep-alive requests stop getting responses.

A Wireshark capture would be a good supplement if you can disable security for the connections so we can decode the traffic.

What version of Ignition are you using?

Currently running on 8.1.7

I will work on getting a Wireshark capture; that is a good idea.

The complication in our system (and a potential culprit) is that we are running our gateway in the cloud and connecting to our local devices via a site-to-site VPN.

The way you’ve described it the obvious first guess is that you have a network problem, not an Ignition problem. The only 4 OPC UA connections that are talking to other hosts on the network all stop responding at the same time? Yeah…

My thinking exactly. There are only 2 things that all 4 connections have in common: the gateway and the network, which makes them the obvious suspects.

I think the gateway connection is a bit easier for me to diagnose/troubleshoot which is why I am starting here.

I am capturing the traffic until they fault again. I will report back when they do!

Sometimes it’s once per day; other times it’s every hour.

It is really frustrating to diagnose when there is no consistency.
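Since the drops come at irregular intervals, one option is to leave a small reachability logger running next to the gateway and compare its timestamps with the SessionFsm log. The following is only a rough sketch: the endpoint addresses are hypothetical placeholders, port 4840 is the registered OPC UA port but the devices may be configured differently, and `probe`, `probe_all`, `format_line` and `monitor` are illustrative names, not part of Ignition. A successful TCP connect only shows that the network path and the listening port are up; it says nothing about the OPC UA session itself.

```python
import socket
import time
from datetime import datetime, timezone

# Hypothetical endpoints: replace with the real device addresses and ports.
ENDPOINTS = [("10.0.0.11", 4840), ("10.0.0.12", 4840)]


def probe(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def probe_all(endpoints, timeout=3.0):
    """Probe every endpoint once and return {(host, port): reachable}."""
    return {(host, port): probe(host, port, timeout) for host, port in endpoints}


def format_line(results):
    """Render one timestamped (UTC) log line for a round of probes."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    status = " ".join(
        f"{host}:{port}={'up' if ok else 'DOWN'}"
        for (host, port), ok in results.items()
    )
    return f"{stamp} {status}"


def monitor(endpoints, interval_s=10.0, rounds=None):
    """Log one line per round; rounds=None keeps going until interrupted."""
    done = 0
    while rounds is None or done < rounds:
        print(format_line(probe_all(endpoints)), flush=True)
        done += 1
        if rounds is None or done < rounds:
            time.sleep(interval_s)
```

Calling `monitor(ENDPOINTS)` on the gateway host would print one line every 10 seconds. If every device shows `DOWN` at the moment the connections fault, that points toward the VPN or network path; if they all stay `up`, the gateway side deserves a closer look.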

Wireshark (and its command-line counterpart tshark) has good support for configuring traffic filters and for capturing into rolling file buffers, which makes it easier to leave a capture running for a long time.
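As a rough illustration of the rolling-buffer idea, here is a small Python helper that assembles a tshark command line. The interface name `eth0`, the capture filter `tcp port 4840` (the registered OPC UA port, which may not match what the devices actually use), the output file name, and the helper `build_tshark_command` are all assumptions for the sake of the example. The tshark options used are `-i` (interface), `-f` (capture filter), `-b filesize:` / `-b files:` (ring buffer) and `-w` (output file).

```python
import shlex


def build_tshark_command(interface, capture_filter, out_file,
                         file_size_kb=50_000, file_count=20):
    """Build a tshark argument list that captures into a ring buffer.

    tshark switches to a new file after roughly file_size_kb kB and
    keeps only the newest file_count files, so disk usage stays bounded.
    """
    return [
        "tshark",
        "-i", interface,                  # capture interface (placeholder)
        "-f", capture_filter,             # capture filter, e.g. a TCP port
        "-b", f"filesize:{file_size_kb}", # rotate after this many kB
        "-b", f"files:{file_count}",      # keep at most this many files
        "-w", out_file,                   # base name for the capture files
    ]


# Assumed values for illustration only.
cmd = build_tshark_command("eth0", "tcp port 4840", "opcua.pcapng")
print(shlex.join(cmd))  # shell-quoted form, ready to paste into a terminal
```

`tshark -D` lists the available interfaces, which helps when the VPN shows up as its own adapter. The list form can also be passed directly to `subprocess.run(cmd)` if you would rather start the capture from a script.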

Good news (or bad since the problem persists) - I was running a Wireshark capture when the connection went down.

The timing of the event roughly corresponded to pushing a project update to the instance that is running this project. Is it possible that the connection is dropping because of that?

Kevin, can I send the Wireshark file to you in a DM?

Sure, try to DM it. If that doesn’t work I can get you a Dropbox upload link.

Ah, yeah, there is a 4 MB limit and the file is 8.3 MB. The Dropbox link would be great.

Can you tell me the IPs and ports involved? I’m not seeing any OPC UA traffic right away. Also not sure what the two different caps are.

The 50.x.x.x:8088 is the Ignition instance while the 10.x.x.x is local traffic.

Importantly, our Ignition instance is hosted in the cloud and all the traffic is routed through a site-to-site VPN.

Sorry, it doesn’t look like you’ve managed to capture any of the traffic we need. I know with my local VPN connection it presents in Wireshark as its own network adapter that I can capture on. Not sure what your setup will look like.

There’s only traffic between 2 IPs in here, a 50.x and a 10.x, and none of it is OPC UA.

Gotcha, I will cast a wider net and try again! Thanks, Kevin.

Oops, ignore my reference to captures plural, I had you upload into an existing folder that had an old capture in it already. But what I said above still applies to your capture.