we have one table that is consuming 140gb monthly and growing. I need to know why this type of table grows so large, and is there any way to have a Stored Procedure or something that will normalize the data to hourly chunks at end of month? This is too much detail for historical data and its eating our storage system up. TB every 5-7 months.

Those tables will grow at a rate that is dependent on how you configure your tag history and how many tags you're recording. I would take a look at your settings on all of your tag's history configuration and adjust the sample mode and deadbands to an appropriate value. It sounds like you may be recording anything and everything at a fast rate which is going to result in large amounts of data usage.

If you need to record everything at fast intervals, consider not using the tag historian. Use the SQL Bridge module's transaction groups to record into wide tables instead, at that pace. Space usage will be ½ to ⅓ of what the historian does for such applications.

The tag historian works best when it doesn't have to record every point, but can reconstruct based on the deadband from fewer recorded points. (See the docs for the storage algorithm.)

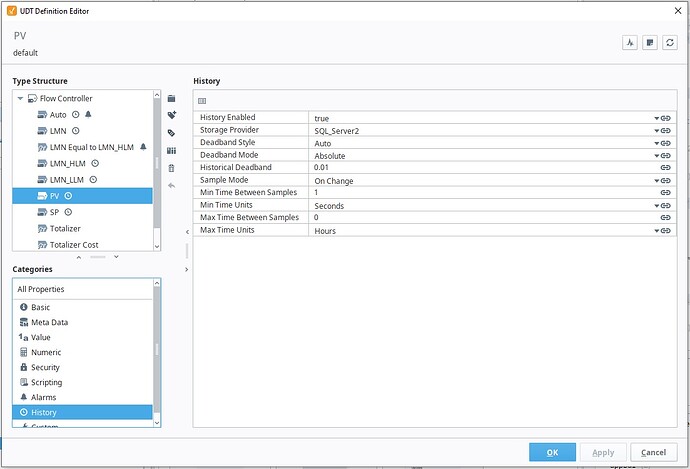

Okay so here are the settings they originally setup. I also included a second photo of their new revised settings they are using today after a call yesterday. Let me know if you have any comments.

Original Setup

Second Setup

Periodic was the problem. It ignores the deadband. Just stores at the sample rate. Makes pretty graphs.

You may need to adjust that deadband. What are the normal range of values for these points. (No need to answer here--just tweak as needed.)

Consider setting a max time between samples of a a minute or two or five. It is helpful when charting if at least one or two real stored points appear on the smallest time range chart you typically use.

You hit the nail on the head pretty graphs. The issue is I really only need to see changes (but we do not know when the changes will occur) so that’s the trick to capture on active change and not miss anything. How or what setting does this tool have to do that? I come from a Rockwell Metrics background, their historian would do the same but we could choose one option and it was on demand change (not sure the actual element name) but it would watch a data point, if it changed it logged the data otherwise no data change no data writes. This kept the db small but still gave you functional graphs/data. They also allowed you to normalize the data by summarizing the data hourly. I am sure this tool can do the same they cannot be that drastically different.

![]()

The adjustment made to "Sample Mode = On Change" will give you similar behavior to what you expect. But Ignition's charting doesn't like big gaps, even if it should be able to reconstruct, which is why I recommend setting "Max Time Between Samples" to a non-zero value (zero meaning infinity).

There's no built-in summarization functionality.